What is Agency, Why AI Agents Lack It, and Why You Should Hire for It

Ever since ChatGPT came out, the whole AI industry has jumped headfirst into LLMs.

Back in the day, early models like GPT-3 were like sentence finishers on autopilot. Handy, but not exactly mind readers. Modern LLMs, though? They’re built for instructions. You say the thing, they do the thing.

Need an email, report, or essay? Done. With the right plugins, they can now search docs, generate images, and even poke around your desktop like a helpful little robot assistant.

And one area where they also shine? Writing code.

Copilots, teammates, and the road to autonomy

During pretraining, LLMs are fed enormous amounts of code, allowing them to learn syntax and best practices for producing useful, working code.

To assess the real-world usefulness of LLMs on coding tasks (and their potential economic impact), OpenAI introduced the SWE-Lancer benchmark this year. It features over 1,400 freelance software engineering tasks sourced from Upwork, representing a total of $1 million in actual payouts. On this benchmark, the Claude 3.5 Sonnet model “earned” roughly $400,000, or about 40% of the total value on offer.

This kind of performance isn’t just theoretical – LLMs are already making their way into everyday development. Today, software engineers use LLMs through copilot-style tools to help write, review, and understand code.

Copilots can draw on the surrounding codebase for context, which lets them offer relevant insights and intelligent code completions to engineers.

Now that LLMs have shown they’re pretty good at coding, the bar has been raised. Enter Cognition AI, the company behind Devin – it’s on a mission to build an AI teammate that doesn’t just help write code, but does the whole software engineering gig. We’re talking writing code, fixing its own bugs, Googling docs like a pro, and even testing the app it just built. It’s basically trying to be that one super-productive teammate who never takes coffee breaks.

And Devin’s not alone – these big dreams are starting to catch on with industry leaders everywhere. Anthropic CEO Dario Amodei has predicted that AI will write essentially all code within a year, and Meta CEO Mark Zuckerberg has said AI will be able to do the work of a mid-level engineer.

These ambitions have not gone unnoticed, and software engineers are beginning to wonder when they will be replaced.

But that day isn’t here yet. The reason?

AI agents still lack some essential qualities that make software engineers – and people in general – truly capable, like real agency. Turns out, there’s more to being a good engineer than just writing code.

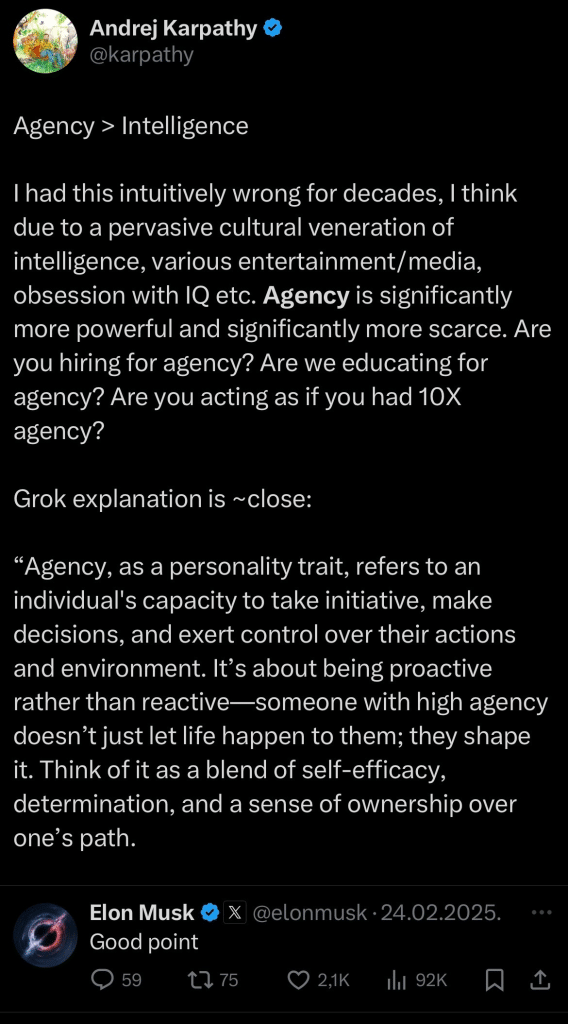

What is agency?

Agency is typically defined as the ability of an individual to make meaningful choices and act on them in ways that influence their life and environment.

Key ingredients of agency? Autonomy, intentionality, capability, and a sprinkle of responsibility!

Individuals with high agency are intrinsically motivated. They believe they have the capability to take proactive action toward their goals and feel responsible for their success. They don’t rely on outside input or instructions – they find their own path.

In contrast, individuals with low agency tend to be more passive, relying on constant external stimuli to take action. For them, life feels more shaped by fate and luck than by their own decisions.

Software engineers are selected for their technical skills, soft skills, and cultural fit, but proactiveness and autonomy – agency – are just as important. Engineers with high agency are goal-driven problem solvers who add great value. They stay ahead of tech trends and often shape, rather than just fit into, company culture.

Software engineers are expected to have agency to do their jobs well. So, if AI is going to take over, it better have some agency, too! Let’s see if it has what it takes.

AI that thinks before it speaks

Most LLMs today are instruction-based. You ask them a question, and they provide a detailed answer.

The first well-known example of this type of LLM was GPT-3.5, the model behind the original ChatGPT. Over time, these models have improved significantly at answering questions.

Today, some of the most capable instruction-based LLMs include GPT-4.5 from OpenAI, Gemini 2.5 Pro from Google, Grok 3 from xAI, and DeepSeek V3 from DeepSeek.

These LLMs are good at answering questions directly, but harder problems that require multiple steps of reasoning call for Chain-of-Thought (CoT) prompting.

With CoT prompting, we ask the LLM to think step by step instead of jumping straight to an answer. The model then breaks its response into intermediate steps, which makes it less likely to overlook something and more likely to arrive at a correct answer.
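As a rough illustration, the difference between a plain prompt and a CoT prompt can be as small as one appended cue. The sketch below only builds the prompt text and does not call any model; the function name `build_prompt`, the prompt layout, and the exact cue wording are assumptions made for this example, not a fixed standard.

```python
# Minimal sketch of Chain-of-Thought prompting (prompt construction only).
# The cue wording and the "Question:/Answer:" layout are illustrative assumptions.
COT_CUE = "Let's think step by step."


def build_prompt(question: str, use_cot: bool = True) -> str:
    """Return a prompt for the question, optionally ending with a step-by-step cue."""
    prompt = f"Question: {question}\nAnswer:"
    if use_cot:
        # The cue nudges the model to write out intermediate steps
        # before committing to a final answer.
        prompt += f" {COT_CUE}"
    return prompt


if __name__ == "__main__":
    question = "A train leaves at 9:40 and arrives at 11:15. How long is the trip?"
    print(build_prompt(question, use_cot=False))
    print("---")
    print(build_prompt(question, use_cot=True))
```

The resulting string would then be sent to whichever LLM you use; the only change from a plain prompt is the trailing cue.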

Because this technique proved so useful, the industry came up with a new type of model: the reasoning model. These LLMs are natively trained to think before providing a final answer to the user. Once given a problem, the model begins thinking out loud about its reasoning process and, after arriving at a conclusion, presents the final answer to the user.

Examples of these models include o1 from OpenAI, DeepSeek R1 from DeepSeek, and Gemini 2.0 Flash Thinking from Google.

Say hello to Agent

So, CoT prompting and reasoning have enabled LLMs to solve complex problems. But to take actions and observe results in the outside world, like checking the weather in your town or placing an order, they need tools.

A system that can take actions to solve a user’s problem is considered an Agent.

To help LLMs become AI agents, the ReAct (Reasoning and Acting) pattern was introduced along with tools, giving them the ability to think and act more dynamically.

LLMs are instructed to think in cycles of Thought, Action, and Observation. When given a problem, the LLM first reasons about what to do (Thought), then outputs an instruction (Action) that an external program can interpret and execute.

For example, the action might be an API call or a simple calculation. Once the action is carried out, the result is returned to the LLM (Observation), which it uses to decide on the next step (another Thought). This cycle repeats until the AI agent completes the task.
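The Thought, Action, Observation cycle can be sketched in a few lines. This is a toy under stated assumptions, not how any particular agent framework implements it: `scripted_llm` is a hard-coded stand-in for a real model, `calculator` is a hypothetical tool, and the `Action: tool[input]` and `Final Answer:` text formats are conventions chosen for this example.

```python
import operator
import re
from typing import Callable, Dict

# Hypothetical tool: evaluates "a op b" for the four basic operators.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def calculator(expression: str) -> str:
    a, op, b = expression.split()
    return str(OPS[op](float(a), float(b)))


def scripted_llm(transcript: str) -> str:
    """Stand-in for a real LLM: asks for a tool first, then answers from the observation."""
    if "Observation:" not in transcript:
        return "Thought: I need to multiply the numbers.\nAction: calculator[12 * 7]"
    last = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: The tool returned {last}.\nFinal Answer: {last}"


ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*?)\]")
FINAL_RE = re.compile(r"Final Answer:\s*(.*)")


def run_agent(question: str, llm: Callable[[str], str],
              tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    """Repeat Thought -> Action -> Observation until the model gives a final answer."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        reply = llm(transcript)            # Thought, plus an Action or a Final Answer
        transcript += reply + "\n"
        final = FINAL_RE.search(reply)
        if final:
            return final.group(1).strip()
        action = ACTION_RE.search(reply)
        if action is None:
            break                          # nothing to execute and no answer
        name, arg = action.groups()
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool {name!r}"
        transcript += f"Observation: {observation}\n"  # fed back to the model
    raise RuntimeError("agent stopped without a final answer")


if __name__ == "__main__":
    print(run_agent("What is 12 times 7?", scripted_llm, {"calculator": calculator}))
```

The `max_steps` cap is a design choice for the sketch: since the model decides when to stop, an external limit keeps a confused agent from looping forever.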

AI agents have been gaining popularity lately, and much of the industry is racing to build practical, helpful versions. One of the most talked-about types is the desktop-controlling agent. Given a task (say, booking a flight to Spain), these agents can perform real actions on your desktop that lead to actual results, like a confirmed ticket.

Notable examples include Operator from OpenAI and Computer Use from Anthropic.

If AI agents can now perform actions on behalf of software engineers – and even see their desktops – what’s stopping them from fully replacing engineers in everyday tasks? The main limitation is agency.

What’s stopping AI from being like humans?

AI agents still haven’t reached the level of agency that human software engineers possess. They continue to lack several key qualities that define true agency in individuals:

- Full autonomy – AI agents need external instructions and inputs to complete a task. They aren’t yet capable of discovering value on their own or pursuing goals without being explicitly told to. To reach true autonomy, they’d need the ability to initiate meaningful action independently.

- Sensing the full environment – They still lack the ability to perceive the world like humans do. Full agency would require access to all human senses and the ability to act on them – through speech, physical actions, and even emotional understanding.

- Intentionality – AI agents only begin acting when a user prompts them. We haven’t yet discovered a way to give them a built-in value system that would guide them toward universal goals and push them to act on their own initiative.

- Capability – To be fully capable, AI agents would need more than just data – they’d need the full range of human senses and the power to interact with the world in complex ways.

- Responsibility – AI agents can correct their mistakes and even apologize, but they don’t truly carry the weight of responsibility. They still require humans to guide, initiate, and finalize their tasks.

Agency = AGI?

Until AI agents fully develop the capabilities tied to human agency, they won’t replace software engineers. Instead, they’ll remain invaluable tools, not complete teammates.

Although AI agents have made great progress in automating tasks – clicking buttons, booking tickets, and more – they still lack true agency. They follow instructions effectively, but they’re not yet capable of independently setting goals, adapting to new situations, or thinking on their feet.

Real agency would be more than just an upgrade – it would signal a leap toward Artificial General Intelligence (AGI), and we’re not quite there… yet.