From file frustration to streamlined data exchange: Rethinking the approach

Every so often, we all need to handle data stored in files. CSV files seem like the way to go and are often the first choice, but for exchanging data between applications I’d rather call them an anti-pattern.

Let’s say a customer requests an Excel report containing specific data. If this is a one-time thing, we can conveniently send it via email. In most cases, however, the data needs to be sent regularly. Not all clients use sophisticated software like Kafka; even when they do, we don’t always have access to it. So we need a solution that is both universally available and easily understood. SFTP and CSV files seemingly fit the bill.

This solution seems optimal for sending data to an external client. The problem arises when an internal application needs to integrate with ours and requires some of our data, and we decide to solve it by exporting CSVs. At first glance, this seems like low-hanging fruit.

Everyone can download a file.

Everyone can read a CSV.

It’s already developed.

While it may appear that simple, it ultimately proves more costly than using a dedicated component for data exchange. I learned this lesson the hard way: I’ve worked on applications on both sides, ones that sent data via files and ones that received it. Here are some of the issues I discovered along the way.

Data storage

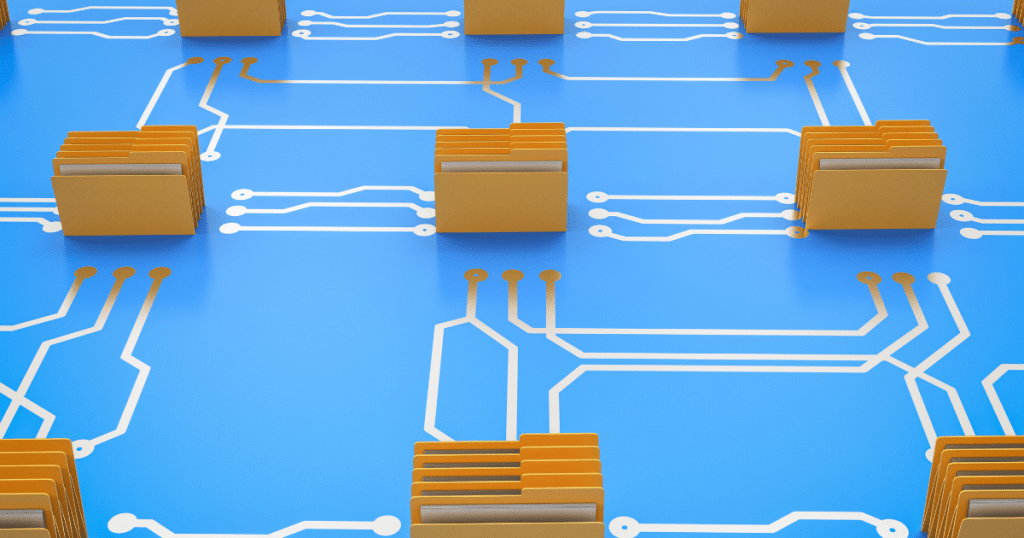

The first thing we need is a place to store the data. Since our data is stored in files, it must be placed within a file system. The decision we face is whether to store the files on the producer machine, consumer machine, or an intermediary machine. Each option has its advantages and disadvantages.

Using the producer machine results in the consumer bearing the entire networking load. The consumer will need to periodically retrieve the data through a PULL operation. In the event of a network link failure, the producer will remain unaffected.

However, this solution has a drawback: when the consumer runs as multiple redundant instances, each instance may attempt to download the same file, and the same data will be processed multiple times. To avoid this, each instance should reserve a file before downloading it, for example by appending a suffix to the file name or moving the file to another folder. Once the file is reserved, the consumer instance can proceed with the download.
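A minimal sketch of such a reservation, assuming the shared folder is reachable as a local path (for example, mounted on the consumer) and that renames on it are atomic, as they are within a single POSIX file system. The function name, the `.reserved` suffix, and the instance ID are hypothetical. Whichever instance renames a file first owns it; the others get `FileNotFoundError` and move on to the next candidate. Over SFTP, the same idea would map to the protocol’s rename operation, provided the server performs it atomically.

```python
import os
from pathlib import Path
from typing import Optional


def reserve_next_file(inbox: Path, instance_id: str) -> Optional[Path]:
    """Claim one unreserved *.csv file in `inbox` for this consumer instance.

    The claim is a rename that appends a per-instance suffix. If another
    instance renamed the file first, the source no longer exists and
    os.rename raises FileNotFoundError, so we try the next candidate.
    """
    for candidate in sorted(inbox.glob("*.csv")):  # oldest first, assuming timestamped names
        reserved = candidate.with_name(f"{candidate.name}.{instance_id}.reserved")
        try:
            os.rename(candidate, reserved)
        except FileNotFoundError:
            continue  # another instance won the race for this file
        return reserved
    return None
```

A consumer’s polling loop would call `reserve_next_file` periodically, then download and process the returned file, or sleep when it gets `None`.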

Using the consumer machine puts all the networking burden on the producer, which needs to PUSH the data to the consumer machine once the file is created. The consumer can watch its local file system for changes and react when a new file appears. As a result, the push-based approach lets the consumer react faster and puts no networking burden on it. Since each file is sent directly to one consumer instance, this approach does not need file reservation.
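Python’s standard library has no file-system notification API, so the sketch below approximates watching with a polling loop (third-party libraries such as watchdog can use native OS notifications instead). It also assumes something the push approach quietly depends on: the producer writes each file under a temporary name and renames it into place, so the consumer never reads a half-written file. All function names here are hypothetical.

```python
import time
from pathlib import Path
from typing import Callable, List, Set


def write_atomically(target: Path, content: str) -> None:
    """Producer side: write under a temporary name, then rename into place."""
    tmp = target.with_name(target.name + ".tmp")
    tmp.write_text(content)
    tmp.replace(target)  # atomic on POSIX when both paths are on the same file system


def new_csv_files(inbox: Path, seen: Set[Path]) -> List[Path]:
    """Return *.csv files not seen before; in-progress *.tmp files are ignored."""
    current = set(inbox.glob("*.csv"))
    fresh = sorted(current - seen)
    seen.intersection_update(current)  # forget files that have since been deleted
    seen.update(fresh)
    return fresh


def watch(inbox: Path, handle: Callable[[Path], None], interval: float = 1.0) -> None:
    """Consumer side: poll the local inbox forever and hand each new file to `handle`."""
    seen: Set[Path] = set()
    while True:
        for path in new_csv_files(inbox, seen):
            handle(path)  # expected to process and then delete the file
        time.sleep(interval)
```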

Pushing files to consumers also has a considerable downside: the producer must balance the files across the consumer instances. Implementing effective client-side balancing is a tough nut to crack. The producer would need to adapt its balancing strategy whenever a consumer instance goes offline, comes back online, or is added to or removed from the group.
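To give a feel for the bookkeeping involved, here is a minimal round-robin balancer a producer might use to pick a target instance for each file. It is a hypothetical sketch: the instance names are made up, and it leaves out the genuinely hard part, which is detecting when an instance goes offline and calling `mark_offline` at the right time.

```python
from typing import Dict, List


class RoundRobinBalancer:
    """Hypothetical producer-side balancer: picks the next online consumer
    instance for each exported file and skips instances marked offline."""

    def __init__(self, instances: List[str]) -> None:
        self._online: Dict[str, bool] = {name: True for name in instances}
        self._cursor = 0

    def add(self, name: str) -> None:
        self._online[name] = True

    def remove(self, name: str) -> None:
        self._online.pop(name, None)

    def mark_offline(self, name: str) -> None:
        if name in self._online:
            self._online[name] = False

    def mark_online(self, name: str) -> None:
        if name in self._online:
            self._online[name] = True

    def next_target(self) -> str:
        """Return the next online instance, trying each instance at most once."""
        names = list(self._online)  # dicts preserve insertion order
        for _ in range(len(names)):
            name = names[self._cursor % len(names)]
            self._cursor += 1
            if self._online[name]:
                return name
        raise RuntimeError("no consumer instance is online")
```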

Using an intermediary machine combines elements of both previously mentioned approaches. It eliminates the need for client-side balancing, but the consumer still needs to reserve the file before downloading it. The data storage is independent of both the consumer and producer, although both parties are still required to transfer files over the network.

Data retention

Regardless of the chosen method for storing data, it is crucial to ensure that the disk does not fill up with files. Failing to do so will render the machines (and the services running on them) unusable. The primary line of defense is to store the files on a separate partition.

Even if that partition fills up, it will not impact other services. This advice applies even when files are stored on an intermediary machine, as some operating system functions may not work properly when the disk is full.
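The separate partition itself is set up at the operating system level. As an additional, hypothetical safeguard, a producer could check how full the partition is before writing another file, using `shutil.disk_usage` from Python’s standard library. The 90% threshold is an arbitrary example.

```python
import shutil
from pathlib import Path

# Hypothetical threshold: stop exporting once the partition is more than 90% full.
MAX_USAGE_RATIO = 0.90


def partition_usage(path: Path) -> float:
    """Fraction of the partition holding `path` that is currently in use."""
    usage = shutil.disk_usage(path)
    return usage.used / usage.total


def can_write(export_dir: Path, max_ratio: float = MAX_USAGE_RATIO) -> bool:
    """True while the export partition is below the usage threshold."""
    return partition_usage(export_dir) < max_ratio
```

When `can_write` returns `False`, the producer could pause exports and raise an alert instead of filling the disk.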

Files can accumulate quite easily. For instance, the consumer application may be down (or not yet deployed) while the producer application keeps generating files. It can also happen if the producer generates data faster than the consumer can process it.

In such cases, we must prevent the files from overflowing the disk. If the data represents events and losing some of them during a failure is acceptable, a retention policy should be implemented.

For example, we can write a script that periodically deletes the oldest files. However, this script adds another component to the architecture that must be created, maintained, and monitored. If it malfunctions or contains a bug, data retention may not work as intended.
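A minimal sketch of such a retention script, assuming files are named `*.csv` and that keeping a fixed number of the newest files is an acceptable policy (both are assumptions; an age-based limit works similarly). Files a consumer has already reserved under a different suffix do not match the pattern, so they are not deleted while being processed.

```python
from pathlib import Path
from typing import List, Tuple


def enforce_retention(directory: Path, max_files: int, pattern: str = "*.csv") -> List[Path]:
    """Delete the oldest matching files until at most `max_files` remain.

    Returns the deleted paths so the caller can log them: each one is lost data.
    """
    entries: List[Tuple[float, Path]] = []
    for path in directory.glob(pattern):
        try:
            entries.append((path.stat().st_mtime, path))
        except FileNotFoundError:
            continue  # a consumer picked the file up between listing and stat
    entries.sort()  # oldest modification time first
    excess = [path for _, path in entries[: max(0, len(entries) - max_files)]]
    for path in excess:
        path.unlink(missing_ok=True)  # it may have been consumed in the meantime
    return excess
```

It would typically run on a schedule (for example, from cron), and its return value should be logged and monitored, since every deleted file is data the consumer never saw.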

Data processing

A file should be removed from the disk only once a consumer has read and processed its whole content. The whole content matters because a file represents a batch of data, and errors or failures can happen at any point between parsing and processing. If you delete the file before all of its data has been processed, the remaining data is lost. If you do not delete the file but have already processed some of its data, that data will be processed twice when the file is picked up again. Both discarding data and processing it twice are undesirable and potentially unacceptable data integrity problems. That is why a file must be processed in its entirety before it is deleted.

This can be challenging, especially if the application involves multiple processing steps, including several database writes: an error in any of these steps may require rolling back the entire batch. Additionally, processing a batch within a single transaction restricts processing to a single thread, whereas performance would benefit from reading the file and processing the data in separate threads.
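One way to honor the all-or-nothing rule is to load a whole file inside a single database transaction and delete the file only after the commit. The sketch below uses Python’s built-in `sqlite3` module as a stand-in database; the `events` table and its `id`/`amount` columns are hypothetical. Note that it runs on one thread, which is exactly the limitation mentioned above.

```python
import csv
import sqlite3
from pathlib import Path


def process_file(path: Path, conn: sqlite3.Connection) -> int:
    """Load all records of one CSV file in a single transaction, then delete it.

    If any row fails, `with conn` rolls the whole batch back and the file stays
    on disk for a retry, so nothing is half-applied.
    """
    with path.open(newline="") as f, conn:  # the connection commits or rolls back on exit
        rows = [(r["id"], r["amount"]) for r in csv.DictReader(f)]
        conn.executemany("INSERT INTO events (id, amount) VALUES (?, ?)", rows)
    path.unlink()  # only reached after a successful commit
    return len(rows)
```

A crash after the commit but before `unlink` would still cause the file to be processed again, so the writes should ideally be idempotent (for example, an upsert keyed on a unique record ID).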

One possible solution is to relax the data integrity requirement by putting all parsed records into an in-memory queue. A file is then considered processed once all its records have been inserted into the queue, and the records can be dequeued and processed one by one, so a single failure no longer reverts the entire batch. The catch is that the data in the queue will be lost if the application fails abruptly. Since abrupt failures are expected to be rare, this trade-off may be acceptable. If losing data is not acceptable, a persistent queue can be introduced (but we chose simple CSV files precisely to avoid adding new components).
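A minimal sketch of this approach using Python’s `queue` and `threading` modules: the calling thread reads files into a bounded in-memory queue and deletes each file once all its records are enqueued, while worker threads process records one at a time. The handler, queue size, and worker count are hypothetical. A record whose handler fails is logged and skipped rather than reverting the batch, and records still waiting in the queue are lost if the process dies abruptly.

```python
import csv
import logging
import queue
import threading
from pathlib import Path
from typing import Callable, Dict, List

Record = Dict[str, str]
_DONE = object()  # sentinel that tells a worker to stop


def read_into_queue(path: Path, q: "queue.Queue[object]") -> None:
    """Parse every record of `path` into the queue, then delete the file."""
    with path.open(newline="") as f:
        for record in csv.DictReader(f):
            q.put(record)  # blocks while the bounded queue is full
    path.unlink()  # from here on, the file counts as processed


def worker(q: "queue.Queue[object]", handle: Callable[[Record], None]) -> None:
    """Process records one by one; a failing record does not revert the others."""
    while True:
        item = q.get()
        if item is _DONE:
            return
        try:
            handle(item)
        except Exception:
            logging.exception("failed to process record %r", item)


def run(paths: List[Path], handle: Callable[[Record], None], workers: int = 4) -> None:
    q: "queue.Queue[object]" = queue.Queue(maxsize=10_000)
    threads = [threading.Thread(target=worker, args=(q, handle)) for _ in range(workers)]
    for t in threads:
        t.start()
    try:
        for path in paths:
            read_into_queue(path, q)  # reading happens on the calling thread
    finally:
        for _ in threads:
            q.put(_DONE)  # one sentinel per worker, even if reading failed
        for t in threads:
            t.join()
```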

It is also important to set a reasonable limit on the number of records per file. A large file delivers a huge amount of data at once, possibly so much that the consumer crashes if it does not have enough memory to read the whole file.
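On the producer side, such a cap can be enforced by splitting the export into numbered files. This is a sketch; the file-name pattern and the 50,000-record default are arbitrary assumptions. Because `islice` pulls at most `max_records` rows at a time, the producer holds only one chunk in memory, as long as `rows` is a lazy iterable.

```python
import csv
from itertools import islice
from pathlib import Path
from typing import Iterable, List, Sequence


def export_in_chunks(
    rows: Iterable[Sequence[str]],
    header: Sequence[str],
    out_dir: Path,
    prefix: str,
    max_records: int = 50_000,
) -> List[Path]:
    """Write `rows` to numbered CSV files with at most `max_records` data rows each."""
    if max_records < 1:
        raise ValueError("max_records must be at least 1")
    it = iter(rows)
    written: List[Path] = []
    while True:
        chunk = list(islice(it, max_records))  # at most one chunk in memory
        if not chunk:
            return written
        path = out_dir / f"{prefix}-{len(written):05d}.csv"
        with path.open("w", newline="") as f:
            writer = csv.writer(f)
            writer.writerow(header)
            writer.writerows(chunk)
        written.append(path)
```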

Data sharing

Once everything is set up and all the monitoring points are implemented (not covered in this article), we can enjoy our manually implemented file-based data exchange solution… until a new consumer is needed for the same data.

Fortunately, we already support this functionality (file download and reservation, data retention, data queuing, monitoring, etc.), so we can easily reuse it in any new consumer. Hopefully, the code has been written well enough to be extracted into a library; otherwise, there will be significant code duplication. And let’s not forget to document the module so that the solution can be integrated effortlessly.

Once we move this code to a module, our file-based data exchange solution will become a reusable component that any application can utilize (provided it is written in the same programming language). The only thing left is instructing the producer to export the files to a new location. There might be some duplicated data between the two locations, but let’s hope we will not add many of these consumers to the system.
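To make the cost concrete, here is a hypothetical sketch of that fan-out: the producer copies every exported file into each consumer’s inbox, so each new consumer adds another full copy of the data on disk (and another directory that needs retention). The inbox names are made up; copying under a temporary name and then renaming keeps consumers from seeing partial files.

```python
import shutil
from pathlib import Path
from typing import List


def publish_to_all(exported: Path, consumer_inboxes: List[Path]) -> List[Path]:
    """Copy one exported file into every consumer's inbox, one full copy each."""
    copies: List[Path] = []
    for inbox in consumer_inboxes:
        tmp = inbox / (exported.name + ".tmp")
        shutil.copy2(exported, tmp)  # copy under a temporary name...
        copies.append(tmp.replace(inbox / exported.name))  # ...then rename into place
    return copies
```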

Data storage already handles this – and it does it better

These are only some of the issues we encountered; others are not mentioned here, and some are yet to be discovered. To resolve them, we had to invest time in development and refactoring, and as a result, we now have a code base to maintain.

But these problems were not unique to us. They are common problems that most data storage software handles out of the box, and handles better!

The selection of software depends on the nature of the data. In our case, the data were events, and the producer application performed some batch processing on them. A more suitable storage option than the files we had been using would be a messaging system:

· Producers and consumers would write to and read from a topic or a queue.

· Data would be stored and replicated to another machine, which also enables geo-redundancy.

· Retention would be managed by the platform, eliminating the need for manual scripts.

· Messaging systems have a commit feature to indicate that a record (or batch) has been processed.

· Some messaging systems support consumer groups, allowing concurrent processing out of the box.

· Different consumers (applications) can be subscribed to the same topic.

· Client libraries are available for the most popular programming languages, so applications are not tied to a single language.

· Monitoring tools for popular systems are already available.

· Popular systems are usually well-documented.
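The commit and consumer-group points are easier to see in a toy model. The class below is an in-memory illustration only, not the API of Kafka or any other broker: a topic is an append-only log, and each consumer group tracks its own committed offset. Uncommitted records are simply delivered again, and a new consumer application is just another group reading the same log, with no file copies, reservations, or deletion logic.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class ToyTopic:
    """In-memory model of a topic: an append-only log plus one committed
    offset per consumer group. Not the API of any real messaging system."""

    def __init__(self) -> None:
        self._log: List[str] = []
        self._committed: Dict[str, int] = defaultdict(int)  # new groups start at 0

    def publish(self, record: str) -> None:
        self._log.append(record)

    def poll(self, group: str, max_records: int = 10) -> Tuple[int, List[str]]:
        """Return (start_offset, records) following this group's last commit."""
        start = self._committed[group]
        return start, self._log[start:start + max_records]

    def commit(self, group: str, next_offset: int) -> None:
        """Mark every record before `next_offset` as processed for `group`."""
        self._committed[group] = next_offset
```

Real systems add what this toy leaves out: persistence, replication, platform-managed retention, and distributing a topic’s records among the members of a group.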

For other types of data, the solution might be a more suitable data store like Redis or Postgres. It all depends on what the data is about and how it can be presented.

Exchanging data via files should be considered an anti-pattern and used only when other approaches cannot be implemented (for example, when integrating with a legacy system). Each time data is exchanged using files, a red flag should go up, and you should ask yourself: is there a better way to do this? There probably is.