How to Compress Prompts with Transformer Attention

When working with large language models, long prompts are often a necessary evil. You want to give the model enough context to get a good response but that comes at a cost: more tokens, slower inference, and higher compute usage.

At some point, I started wondering: do we really need all these words?

Most prompt compression tools out there use another language model to rewrite your input. This approach kind of defeats the purpose if you’re trying to save on resources. It’s like asking one expensive model to help another expensive model do the job slightly faster.

That is why my team and I tried a different approach.

Instead of generating shorter prompts with another model, I built a method that selects the most important words using attention scores from BERT. No generation, no fine-tuning, just raw attention weights, entity detection, and a few rules to decide what to keep.

The result is a lightweight, interpretable prompt-compression method that works without relying on another LLM.

In this post, I’ll break down how it works, show a few before-and-after examples, and share what I learned along the way.

Slimming your prompts actually matters

Working with LLMs often seems straightforward. Yes, they can understand and generate impressive responses, but once you start chaining prompts, injecting long contexts, or passing in full conversation histories things start to break.

At first, the issues show up as a subtle lag, then you hit token limits, and eventually, your API costs spike, or your app slows to a crawl because you’re feeding massive prompts into a model that wasn’t designed to handle them efficiently.

This isn’t just a theoretical issue, in real-world systems every token matters.

More tokens mean:

- Slower inference (because transformer attention scales quadratically with input length)

- Higher memory usage (especially with large batch sizes)

- And in many cases, higher cost (if you’re running on commercial APIs or hosting large models)

There’s also the environmental sustainability cost that comes with overusing LLMs, which is another reason why prompt compression matters.

Compressing prompts means doing more with fewer tokens and that translates to less compute, lower energy consumption, and ultimately a smaller carbon footprint. At scale, these optimizations add up. As the AI community becomes more sustainability-focused, lightweight solutions like this are not only technically interesting but also a step in the right direction.

There are already some smart solutions out there. Techniques like sparse attention, selective context injection, and LoRA-based optimization all try to reduce memory or computation load. But when it comes to compressing the actual prompt most methods still rely on using another LLM to do the rewriting. Tools like LLMLingua and Compress-GPT fall into this category.

And while those work to some extent, they come with trade-offs:

- You’re still paying inference cost for the compression model

- You can’t easily explain why certain tokens were kept or removed, as you are using LLM as a black box

- And in latency-sensitive systems, even one extra model call can be too much

That’s where our approach comes in – a method that skips the second LLM entirely, and uses something we already have access to: attention scores.

Let’s go through it step by step

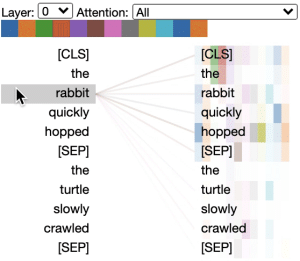

The core idea is simple: if attention tells us which tokens are most important in a sentence, then maybe we can use those scores to decide which words to keep and which to drop.

We built a custom compression function based on this idea, using BERT as our underlying model. Unlike most LLMs used for generation, BERT is bidirectional and relatively lightweight making it well-suited for analyzing text without having to generate anything new.

Our goal wasn’t to rewrite the input but to select the most informative words and remove everything else. No decoding, no generation, no extra inference calls. Just one model pass, and a series of filtering steps.

Here’s how the full pipeline works at a high level:

- Preprocessing: We tokenize the input using BERT’s tokenizer and run Named Entity Recognition (NER) to highlight important concepts like names, locations, or organizations.

- Attention Scoring: We extract multi-layer attention weights from BERT to measure token importance and contextual relevance.

- Token Selection: We combine the scores with entity boosts and apply filtering rules to keep only the most meaningful words.

- Reconstruction: The selected tokens are joined in their original order to produce a compressed version of the prompt.

The result is a short, focused version of the original input that preserves key meaning while cutting down token count by more than half in many cases.

What makes this approach different is that it’s fully interpretable. You can trace exactly why each token was kept which opens up interesting directions in explainability and trust for LLM-driven systems.

How the compression algorithm works

1. Preprocessing

We start by tokenizing the input text using the BERT tokenizer, which breaks the sentence down into subwords. Alongside that, we run Named Entity Recognition (NER) to identify important entities like people, places, organizations, etc. These entities will get a score boost later on, since they often carry key meaning.

2. Attention Scoring

This is the heart of the algorithm. Here’s how we extract and process attention scores:

- BERT outputs multi-head self-attention for each layer. That means each token has a set of attention scores for every other token across all heads and layers.

- We average attention scores across heads for each layer to simplify things.

- Then we weight deeper layers more heavily, since later layers in BERT tend to capture higher-level semantic relationships. We use an exponentially weighted average across all layers to prioritize that.

- For each token, we calculate a Base Attention Score by averaging its attention to all other tokens across the model. This gives us a general importance score per token.

- Next, we identify a Key Token (the one with the highest base score).

- We then compute a second score: how much each token attends to the Key Token. This captures contextual importance relative to the sentence’s core idea.

- Finally, we calculate the Final Score for each token as a weighted mix:

- 60% from the base attention score

- 40% from attention to the key token

- Plus a 50% boost for tokens near the key token, within a small window to preserve local context

3. Word Selection

Once we’ve scored the tokens, we need to convert subword tokens back into full words:

- We group subwords into whole words and average their scores.

- Named entities get a 3× score boost, since we usually want to preserve those.

- Stopwords are filtered out entirely, unless they’re part of an important phrase.

This gives us a list of scored, full words ranked by how important they are in the sentence.

4. Compression

Now we decide how aggressively to compress:

- You can use either a fixed compression ratio (e.g., “keep 40% of the original words”) or a percentile threshold (e.g., “keep all words scoring above X”).

- The key token is always kept, no matter what.

- If part of a named entity was dropped, we add it back to maintain completeness.

This ensures that the final output doesn’t lose core meaning, even in very compressed cases.

5. Output

Finally, we reconstruct the compressed prompt by reordering the selected words into their original sequence. We don’t generate anything new just filter and reassemble the most informative pieces of the original input.

This method gives you a compressed prompt that:

- Preserves key meaning

- Cuts down token count significantly

- Doesn’t rely on another LLM

- And gives you visibility into why each token was kept which is rare in this space

Smart prompt compression in action

To see the algorithm in action, let’s look at a simple example.

Given the input: “Do you happen to have details about what countries are located near Egypt?”

The compressed output becomes: “countries located near Egypt”

Most of the filler words and question phrasing are removed, while the core meaning is preserved. The model identified “countries” as the key token, and prioritized tokens like “located,” “near,” and “Egypt” based on their attention to it. This is a simple case, but it shows how the method keeps the essence of the prompt without relying on rewriting or generation.

This is still a work in progress, but early results are promising. We compared our method against a few existing compression approaches including GPT-4o-mini, LLMLingua, Sentence Compression, and DeepSeek while focusing on two key metrics: token reduction and task accuracy.

In terms of compression, our approach consistently reduced token count by more than half, making it one of the most effective extractive methods we tested. While GPT-4o-mini was slightly more aggressive, our method outperformed others like LLMLingua and Sentence Compression in terms of token savings.

Accuracy-wise, there’s still room for improvement. Sentence Compression performed slightly better in downstream accuracy on average, but our method still held up well, performing significantly better than DeepSeek and other smaller fine-tuned models.

As we continue refining the scoring logic, exploring dynamic compression ratios, and integrating semantic similarity checks, we expect both performance and quality to improve further.

Why this matters?

Prompt compression is becoming essential as LLMs are integrated into real-time systems, cost-sensitive applications, and environments where performance and transparency matter. Our approach shows that it’s possible to reduce prompt length significantly without relying on another large model, while still keeping the logic interpretable and lightweight.

That said, this is just the beginning. We’re exploring ways to improve accuracy through better scoring functions, adaptive compression strategies, and integrating semantic similarity checks to ensure that compressed prompts still match the intent of the original. We also see potential in using this technique as part of explainability pipelines, helping us better understand how LLMs interpret inputs.

Prompt compression sits at the intersection of efficiency, usability, and trust in LLM systems. By leveraging transformer attention instead of relying on additional models, we’ve taken a step toward faster, cheaper, and more interpretable language model workflows. While our method is still evolving, it opens up new possibilities for scaling LLM-based applications without compromising on clarity or control.