Shawn Wang on the rise of the new role: AI engineer

According to Shawn Wang, founder of Smol.ai, a new role is emerging: the AI Engineer. He laid out his case in his keynote “Software 3.0 and The Emerging AI Developer Landscape” at the Shift conference.

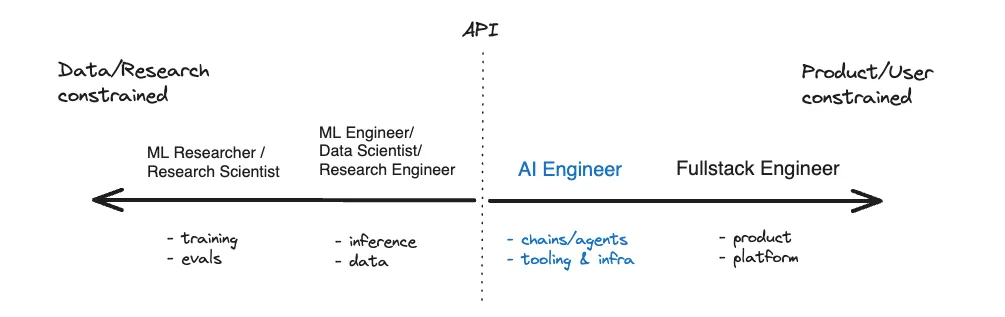

Wang bases his thesis on a profound transformation of the AI landscape: advanced models are now accessible through APIs, producing what he calls a once-in-a-generation “shift right” of applied AI.

Bridge the gap between AI research and application

A wide range of AI tasks that took 5 years and a research team to accomplish in 2013 now require API docs and a spare afternoon in 2023.

But Wang is not among the emerging prophets who claim AI will replace developers at writing code and that they will all have no choice but to become Prompt Engineers. Instead, AI engineers will bridge the gap between AI research and application, dealing with the challenges of evaluating, applying, and productizing AI:

- Evaluating models

- Finding the right tools

- Staying on top of the research and news

And, according to Wang, it’s a full-time job!
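Evaluating models is the most hands-on item on that list. As a rough illustration (a minimal sketch, not Wang's method), the Python below scores candidate models against a small hand-labeled test set. The models are plain callables standing in for real API clients (a hypothetical setup), and case-insensitive exact-match accuracy is an assumed metric chosen for simplicity; real evaluations usually need richer scoring.

```python
from typing import Callable, Dict, List, Tuple

# A "model" is any function from input text to output text. In practice it
# would wrap an API client; the toy models below are hypothetical stand-ins.
Model = Callable[[str], str]


def accuracy(model: Model, cases: List[Tuple[str, str]]) -> float:
    """Fraction of cases where the model output matches the expected label
    (case- and whitespace-insensitive exact match)."""
    if not cases:
        raise ValueError("need at least one test case")
    hits = sum(
        1
        for text, expected in cases
        if model(text).strip().lower() == expected.strip().lower()
    )
    return hits / len(cases)


def rank_models(models: Dict[str, Model], cases: List[Tuple[str, str]]) -> List[Tuple[str, float]]:
    """Score every candidate on the same cases and sort best-first."""
    scores = [(name, accuracy(model, cases)) for name, model in models.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


def keyword_model(text: str) -> str:
    # Toy heuristic: flags "terrible" as negative, everything else positive.
    return "negative" if "terrible" in text.lower() else "positive"


def always_positive(text: str) -> str:
    # Toy baseline that ignores its input.
    return "positive"


if __name__ == "__main__":
    cases = [
        ("I love this product", "positive"),
        ("This is terrible", "negative"),
        ("Works great", "positive"),
    ]
    for name, score in rank_models({"keyword": keyword_model, "always_positive": always_positive}, cases):
        print(f"{name}: {score:.2f}")
```

With this toy data the keyword heuristic scores 1.00 and the constant baseline 0.67. Swapping in real model clients only changes the callables; the test set and metric stay fixed, which is what makes the comparison fair.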

Not trained in ML, but shipping AI products

AI Engineers can be found everywhere, from the largest companies like Microsoft and Google, to leading-edge startups like Figma, Vercel, and Notion, to independent hackers. They don’t do research and are not trained in machine learning; some of them may not even be fluent in Python, yet they are using AI advances to launch products used by millions in record time.

When it comes to shipping AI products, you want engineers, not researchers.

Illustration: Shawn Wang

The birth of the AI engineer is fueled by:

- Foundation models and their possibilities

Foundation models have capabilities, such as picking up a new task from a few examples given in the prompt, that let them generalize beyond what they were trained to do. In other words, the people training the models don’t fully know what the models are capable of.

People who are not LLM researchers can discover and exploit these capabilities simply by spending more time with the models and applying them to often-undervalued domains (e.g., Jasper for copywriting).
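One capability of this kind is in-context (few-shot) learning: the model infers a task from a handful of worked examples placed in the prompt, with no retraining. The Python sketch below only assembles such a prompt for a copywriting task; the `build_few_shot_prompt` helper and its Input/Output layout are hypothetical conventions, not a format required by any particular model or API.

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble a few-shot prompt: instruction, worked examples, then the new input.

    The model is expected to continue the pattern after the final "Output:".
    """
    parts = [instruction.strip(), ""]
    for source, target in examples:
        parts.append(f"Input: {source}")
        parts.append(f"Output: {target}")
        parts.append("")  # blank line between examples
    parts.append(f"Input: {query}")
    parts.append("Output:")  # left open for the model to complete
    return "\n".join(parts)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Rewrite each product description as a one-line ad slogan.",
        [
            ("Stainless steel water bottle, keeps drinks cold 24h", "Cold for a day, built for a lifetime."),
            ("Noise-cancelling headphones with 30h battery", "Silence the world, all day long."),
        ],
        "Ergonomic office chair with lumbar support",
    )
    print(prompt)
```

The "training data" here is two example pairs typed by hand, which is exactly why non-researchers can explore these capabilities: changing the task means editing the examples, not retraining a model.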

- There are not enough researchers

Big tech companies and their research labs have absorbed most of the available talent and offer it back as “AI Research as a Service” through APIs: you can’t hire these researchers, but you can rent them. Wang adds that there are about 5,000 LLM researchers in the world but about 50 million software engineers, and such a supply constraint dictates that a “middle” class of AI engineers will emerge to meet the demand.

- GPUs are scarce

GPUs are critical to training and running deep learning models efficiently. Many startups and companies are investing in GPU clusters to support their internal AI initiatives, but there aren’t enough GPUs for everyone (nor are they cheap). Under these circumstances, it makes more sense for AI engineers to excel at using existing AI models than at training new ones.

- Fire, ready, aim

Instead of asking ML scientists and engineers to painstakingly collect data, train a model for a specific domain, and verify that it is production-worthy, a product manager or software engineer can query an LLM like ChatGPT to build or validate a product idea before any domain-specific training data exists.
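To make this concrete, here is a minimal Python sketch of the kind of request someone might send to validate an idea, such as zero-shot triage of support tickets. It assumes an OpenAI-style chat completions body (a `model` name plus a list of `messages` with `role` and `content`, and an optional `temperature`); the model name is a placeholder, and the code only builds the JSON rather than sending it, so no API key or network access is assumed.

```python
import json
from typing import Dict, List


def build_chat_request(model: str, system: str, user: str, temperature: float = 0.0) -> str:
    """Serialize an OpenAI-style chat completions request body as JSON.

    Sending it (an authenticated HTTP POST) is deliberately left out.
    """
    messages: List[Dict[str, str]] = [
        {"role": "system", "content": system},  # the task, stated in plain language
        {"role": "user", "content": user},      # the input to act on
    ]
    body = {"model": model, "messages": messages, "temperature": temperature}
    return json.dumps(body, indent=2)


if __name__ == "__main__":
    # Zero-shot classification: no labeled training data, only instructions.
    print(build_chat_request(
        model="your-model-name",  # placeholder, not a real model ID
        system="Classify the support ticket as 'billing', 'bug', or 'other'. Reply with the label only.",
        user="I was charged twice for my subscription this month.",
    ))
```

If the replies look useful on a few dozen real tickets, the idea is worth pursuing; only then does collecting data or fine-tuning become a question.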

- Programming languages

The data/AI industry is mainly focused on Python, and AI engineering tools like LangChain and Guardrails came from the same community. However, there are at least as many JavaScript developers, and they are finally getting their own AI tools (e.g., LangChain.js and Transformers.js), expanding the appeal of AI development to other developer communities.

- Generative AI vs. Classifier ML

“Generative AI” is slowly falling out of favor as a term, but it still marks a useful distinction. While the current generation of ML engineers has focused on security, fraud prevention, recommendation systems, anomaly detection, classification, etc., the new generation of AI engineers will focus on application development, personalized learning tools, and visual programming languages.

The Software 3.0 era is here

In the Software 1.0 era, programmers relied on detailed, hand-written code that precisely defined logic, and Software 2.0 replaced parts of that code with neural networks trained to approximate logic. Software 3.0, Wang explained, marks another significant shift: developers build on pretrained models accessed through APIs instead of training networks themselves. This change enables new generations of software to tackle more complex and intricate problems that were previously hard for human programmers.

According to Wang, the current debate over human- versus AI-generated code, and whether software engineers will become obsolete, misses the point:

As human Engineers learn to harness AI, AIs will increasingly do Engineering as well, until a distant future when we look up one day and can no longer tell the difference.